How to build production-ready AI systems with event-driven architecture

Why most AI apps break in production

Most AI features start simple.

You call a model API. You wait for the response. You return it to the frontend.

"It works, until it doesn't."

As soon as AI becomes a real product feature, new requirements appear:

- You need to validate output before showing it.

- You need to enrich it with database data.

- You need to trigger side effects.

- You need retries and timeouts.

- You need observability.

- You need real-time updates without blocking requests.

At that point, a synchronous AI call is no longer enough.

You need a system.

And that system needs to be event-driven.

The problem with synchronous AI

The typical architecture looks like this:

Frontend → Backend → LLM → Response → Done

The backend waits for the AI. The frontend waits for the backend. Everything is tightly coupled.

AI calls are external and unpredictable. Your business logic is internal and stateful.

Treating both as a single blocking request ties your request latency and failure modes to the model provider's.

What is event-driven AI?

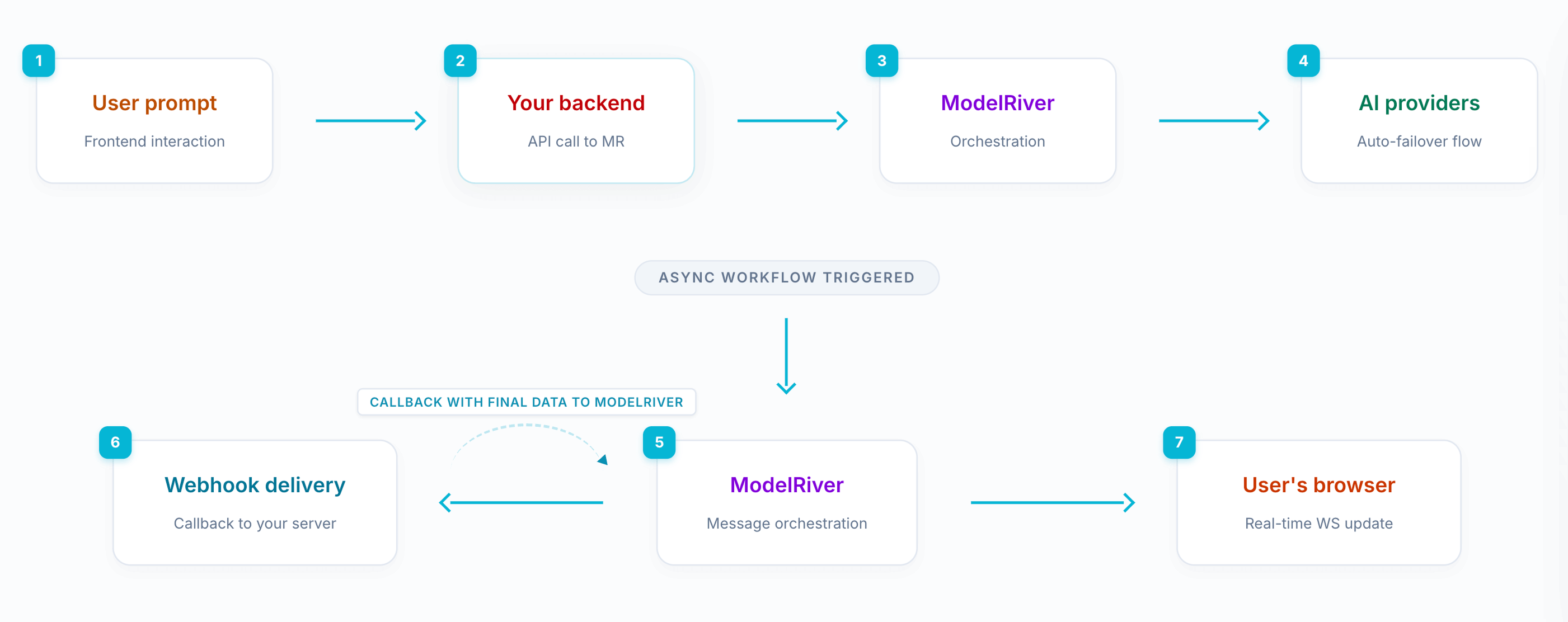

Event-driven AI decouples AI generation from response delivery.

Instead of waiting synchronously for an AI response, you:

- Fire an async request.

- Let ModelRiver process the AI in the background.

- Receive the result via webhook.

- Run custom backend logic.

- Send the enriched data back using a callback URL.

- Stream the final result to the frontend in real time.

The system becomes a lifecycle, not a function call.
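The steps above can be sketched as a small in-memory simulation. This is an illustration only, not ModelRiver's API: the function names (`fire_async_request`, `on_webhook`, `send_callback`, `broadcast`), the payload fields, and the queue are hypothetical stand-ins for the HTTP request, the webhook endpoint, the callback URL, and the WebSocket channel.

```python
import queue
import uuid

# Hypothetical in-memory stand-ins for the network hops in the lifecycle.
webhook_queue = queue.Queue()   # provider -> your backend (webhook)
delivered = []                  # messages "streamed" to the frontend


def fire_async_request(prompt: str) -> str:
    """Submit the request and return immediately with an id."""
    request_id = str(uuid.uuid4())
    # Simulated background generation: the provider would later POST the
    # result to your webhook. Here the result is simply enqueued.
    webhook_queue.put({"request_id": request_id, "output": f"echo: {prompt}"})
    return request_id


def broadcast(request_id: str, data: dict) -> None:
    """Push the final result to the frontend (simulated)."""
    delivered.append({"request_id": request_id, **data})


def send_callback(request_id: str, data: dict) -> None:
    """Return enriched data via the callback URL (simulated)."""
    broadcast(request_id, data)


def on_webhook(event: dict) -> None:
    """Receive the AI result and run custom backend logic on it."""
    enriched = {"text": event["output"].upper(), "source": "backend"}
    send_callback(event["request_id"], enriched)


def drain_webhooks() -> None:
    """Process every pending webhook event."""
    while not webhook_queue.empty():
        on_webhook(webhook_queue.get())
```

Calling `fire_async_request("hello")` returns an id right away while `delivered` is still empty; the result only appears after `drain_webhooks()` runs, which is the decoupling the lifecycle describes.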

Yes, webhooks add moving parts. We handle retries, verification, and observability so you don't have to.
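This article does not say how webhook verification is implemented. One common approach, shown here purely as an assumption, is for the sender to sign the raw request body with a shared secret using HMAC-SHA256 and for the receiver to recompute and compare the signature. The function names and the idea of a hex signature carried alongside the body are hypothetical.

```python
import hashlib
import hmac


def sign(secret: bytes, body: bytes) -> str:
    """Compute the hex HMAC-SHA256 signature of a raw request body."""
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def is_authentic(secret: bytes, body: bytes, signature: str) -> bool:
    """Compare signatures in constant time to avoid timing leaks."""
    return hmac.compare_digest(sign(secret, body), signature)
```

Verifying against the raw bytes, before any JSON parsing, matters: re-serializing the payload can change whitespace or key order and invalidate an otherwise correct signature.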

A realistic failure example

Imagine the AI returns malformed JSON.

Your backend validates the structured output and rejects it.

Instead of:

- Crashing the request

- Sending corrupted data

- Leaving the user hanging

The lifecycle becomes:

- AI generation completes.

- Backend validation fails.

- Backend sends structured error via callback.

- WebSocket broadcasts a friendly error message to the user.

The user sees a clean failure state, not a broken UI.

That is architectural resilience.
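A minimal sketch of that failure path, assuming the backend expects a JSON object with `title` and `summary` fields. The schema, the error codes, and the user-facing message are all hypothetical; the point is that malformed output becomes a structured payload instead of an exception.

```python
import json

REQUIRED_FIELDS = {"title", "summary"}  # hypothetical schema


def validate_output(raw: str) -> dict:
    """Return a callback payload: the parsed data or a structured error."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return {"status": "error", "code": "malformed_json", "detail": exc.msg}
    if not isinstance(data, dict):
        return {"status": "error", "code": "unexpected_shape",
                "detail": "expected a JSON object"}
    missing = sorted(REQUIRED_FIELDS - set(data))
    if missing:
        return {"status": "error", "code": "missing_fields",
                "detail": ", ".join(missing)}
    return {"status": "ok", "data": data}


def user_message(payload: dict) -> str:
    """Map a payload to the text the real-time layer would show the user."""
    if payload["status"] == "ok":
        return payload["data"]["summary"]
    return "We couldn't generate a result this time. Please try again."
```

The technical detail stays in the payload for logging, while the user only ever sees the friendly message.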

The three-step event-driven flow

Each part has a clear responsibility:

- AI generation

- Backend processing

- Real-time delivery

They are no longer tightly coupled.

Why event-driven architecture matters

Non-blocking by design

Frontend requests never wait for:

- AI execution

- Backend processing

- External API calls

Everything is asynchronous.

Custom logic before delivery

You control what users see.

- Validate

- Enrich

- Transform

- Store

- Reject

Before the result reaches the UI.
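One way to organize these stages, sketched under assumptions, is a list of small functions that each take and return the result, with an exception type that any stage can raise to reject it. `FAKE_DB`, `STORE`, and the field names are hypothetical placeholders for your own database and schema.

```python
class Rejected(Exception):
    """Raised by any stage to stop delivery to the UI."""


FAKE_DB = {"user-1": {"plan": "pro"}}  # hypothetical database rows
STORE = []                             # hypothetical persistence layer


def validate(result: dict) -> dict:
    if not result.get("text"):
        raise Rejected("empty output")
    return result


def enrich(result: dict) -> dict:
    plan = FAKE_DB.get(result["user_id"], {}).get("plan", "free")
    return {**result, "plan": plan}


def transform(result: dict) -> dict:
    return {**result, "text": result["text"].strip()}


def store(result: dict) -> dict:
    STORE.append(result)
    return result


PIPELINE = [validate, enrich, transform, store]


def process(result: dict) -> dict:
    """Run every stage in order; a Rejected error stops delivery."""
    for stage in PIPELINE:
        result = stage(result)
    return result
```

Because validation runs first, a rejected result is never enriched or stored, and the caller decides what to send to the UI when `Rejected` is raised.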

Reliability and retries

Event-driven workflows allow:

- Webhook retries

- Timeout handling

- Failure visibility

- Safe callback confirmation

You gain reliability without complex glue code.
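For a sense of what retry handling involves if you build it yourself, here is a generic retry loop with exponential backoff. It is not a description of how ModelRiver schedules retries; the function name, the defaults, and the injectable `sleep` parameter are assumptions made for illustration and testing.

```python
import time
from typing import Callable


def deliver_with_retries(
    send: Callable[[], bool],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call send() until it succeeds; return the number of attempts used.

    The wait starts at base_delay and doubles after each failure.
    Raises RuntimeError once max_attempts is exhausted.
    """
    for attempt in range(1, max_attempts + 1):
        if send():
            return attempt
        if attempt < max_attempts:
            sleep(base_delay * 2 ** (attempt - 1))
    raise RuntimeError(f"delivery failed after {max_attempts} attempts")
```

Retries mean the same event can arrive more than once, so the receiving handler should be safe to run twice for the same request id.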

Full observability

Every step is logged:

- AI request

- Webhook delivery

- Backend callback

- Final broadcast

Instead of guessing where a failure happened, you see exactly which lifecycle step failed.
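A per-request timeline can be modeled as an ordered list of step records. The sketch below is a hypothetical data structure, not ModelRiver's timeline format; the step names simply mirror the four logged steps listed above.

```python
from dataclasses import dataclass, field

STEPS = ["ai_request", "webhook_delivery", "backend_callback", "final_broadcast"]


@dataclass
class Timeline:
    """Ordered record of lifecycle steps for one request."""
    request_id: str
    events: list = field(default_factory=list)

    def record(self, step: str, ok: bool, detail: str = "") -> None:
        if step not in STEPS:
            raise ValueError(f"unknown step: {step}")
        self.events.append({"step": step, "ok": ok, "detail": detail})

    def first_failure(self):
        """Return the first failed event, or None if every step succeeded."""
        return next((e for e in self.events if not e["ok"]), None)
```

With one record per step, answering "where did this request fail?" becomes a lookup rather than a search through scattered logs.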

When to stay synchronous

Event-driven architecture is powerful, but not always necessary.

Staying synchronous is reasonable in these scenarios:

| Scenario | Why synchronous is fine |
|---|---|
| Prototypes | Speed matters more than architecture |
| Latency-critical flows (< 3 s) | Extra lifecycle steps may add overhead |
| Simple RAG chat | No backend enrichment required |
| Low-traffic apps | Complexity may not justify async orchestration |

Start simple. Evolve when production demands it.

Event-driven vs streaming + background jobs

Some teams attempt to solve production issues by:

- Streaming tokens directly to the frontend

- Running background jobs for persistence

That works, to a point.

But it often leaves gaps:

- No unified lifecycle

- Harder retry coordination

- Limited observability

- Scattered failure handling

Event-driven architecture centralizes:

- Execution

- Processing

- Delivery

- Visibility

Instead of patching reliability onto streaming, it builds reliability into the flow itself.

The bigger architectural shift

AI is not just inference.

It is:

- Workflow orchestration

- State management

- External dependency handling

- Cost-sensitive execution

- Real-time distribution

Once you model AI as an event-driven lifecycle instead of a blocking function call, your system becomes modular instead of fragile.

That shift is what separates demo AI from production AI.

Next steps

- https://modelriver.com/docs/event-driven-ai

- https://modelriver.com/docs/webhooks

- https://modelriver.com/docs/client-sdk

- https://modelriver.com/docs/observability/timeline

- https://modelriver.com/docs/api/endpoints

If you're building AI-powered products in production, event-driven architecture gives you control, reliability, and real-time delivery without unnecessary complexity.